Advertisement

The way machines understand language has changed a lot. A big part of that change is due to something called “attention mechanisms.” These aren't flashy tech gimmicks or fancy upgrades. They're more like a shift in how machines process words, sentences, and meaning.

Instead of treating every word equally, attention lets a model focus on what matters most. It mimics how humans read — not every word gets the same weight when we try to understand a sentence. This article breaks down the types of attention mechanisms, how they work, and what makes them different.

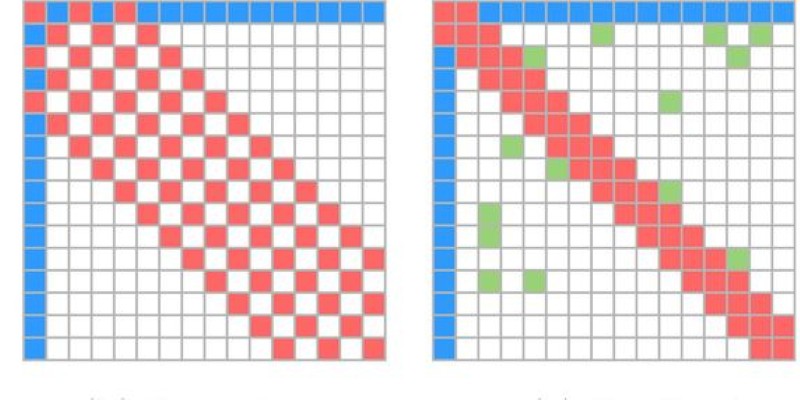

These two are among the earliest forms. They differ in how they select parts of input data.

Soft attention doesn’t really “choose” a word. It gives each word a score and looks at all words at once. It assigns more weight to important ones, but nothing gets left out entirely. This kind of attention is easy to work with. It’s smooth, trainable with standard methods, and doesn’t need guesses or tricks.

Hard attention is stricter. It chooses one or a few elements to focus on and ignores the rest. This can be more realistic but harder to train. It’s like closing one eye and trying to guess where to look. It uses sampling and sometimes needs special training methods like reinforcement learning. It’s faster but less predictable.

These terms come up often in tasks like translation.

Global attention checks all words in the input. It's useful when a word's meaning depends on the whole sentence. This is like scanning every word in a paragraph before picking one to focus on. It's accurate but slower, especially for long input.

Local attention limits the search. Instead of scanning everything, it looks near a target position. Think of this like reading a sentence but only focusing on a few nearby words at a time. It's quicker and works well when the context is close. In translation, if a word in English maps directly to a nearby word in French, local attention does the job.

These are common in older models like seq2seq with attention.

Additive attention uses a small neural network to decide which input words matter. It combines the current decoder state with each encoder state and passes them through a feed-forward network. It’s flexible and works well with small data.

Multiplicative attention, also known as dot-product attention, skips the extra network. It multiplies the current state with each input state to get a score. It's faster and more efficient, especially with matrix operations. This type is the backbone of transformers. Scaled dot-product attention — a variation — divides the result by a scaling factor to stabilize the values.

This is the core of transformer models like BERT and GPT.

Self-attention means a word in a sentence looks at all the other words — including itself — to determine what's important. It allows the model to understand how words relate to each other. For example, in "The dog chased its tail," self-attention helps connect "its" back to "dog."

Each word becomes a "query," and it checks every other word, which acts as "keys" and "values." The result is a weighted average of these values. This lets the model pick up on long-distance connections. It's fast, easy to scale, and allows parallel processing, unlike older methods that work step by step.

Multi-Head Attention

This is an extension of self-attention. Instead of doing one attention calculation, multi-head attention does several at once. Each "head" learns a different way to look at the sentence. One head might focus on subject-verb relationships, another on modifiers, and another on punctuation.

By combining these views, the model captures richer details. It's like asking several readers to explain a sentence and then combining their thoughts. This diversity in attention makes transformer models more precise and adaptable.

Used when two inputs are involved — such as in translation, where an English sentence gets converted into French.

In cross-attention, the query comes from one sequence (like the decoder), and the keys and values come from another (like the encoder). This is different from self-attention, where everything comes from the same sequence.

Cross-attention helps one part of the model figure out where to focus in another part. In translation, the French output uses English input to decide what to say next. It's how transformers keep the output grounded in the input.

One weakness of standard attention is that it looks at everything. This is slow and memory-heavy for long inputs.

Sparse attention solves that by reducing what each word can look at. It adds patterns, like focusing only on nearby words or words at regular intervals. Some models use learned patterns, and others use fixed ones. Sparse attention keeps the model fast while still catching important connections.

Popular models like Longformer and BigBird use this idea to handle long documents without losing speed or accuracy.

Some tasks involve structured input, like paragraphs made of sentences. Hierarchical attention handles this by applying attention at two levels.

First, it looks at the words inside each sentence. Then, it looks at sentences inside the paragraph. This two-step approach lets the model figure out what words matter and which sentences carry the key message. It's used in tasks like document classification and summarization.

Attention mechanisms changed how machines deal with language. Instead of reading blindly, they now know where to focus. Each type — from soft to sparse, from self to cross — brings a different tool. Some are good for speed. Some help understand meaning better. Some work best in short texts, others in long ones. What matters is picking the right one for the task. These mechanisms are now the backbone of modern models. They've made machines better at translating, summarizing, answering questions, and more. Not through magic — but by teaching them to pay attention the way we do.

Advertisement

A fake ChatGPT Chrome extension has been caught stealing Facebook logins, targeting ad accounts and spreading fast through unsuspecting users. Learn how the scam worked and how to protect yourself from Facebook login theft

Explore FastRTC Python, a lightweight yet powerful library that simplifies real-time communication with Python for audio, video, and data transmission in peer-to-peer apps

How to fine-tuning small models with LLM insights for better speed, accuracy, and lower costs. Learn from CFM’s real-world case study in AI optimization

Intel and Nvidia’s latest SoCs boost AI workstation performance with faster processing, energy efficiency, and improved support

Explore 8 clear reasons why content writers can't rely on AI chatbots for original, accurate, and engaging work. Learn where AI writing tools fall short and why the human touch still matters

Learn how business leaders can measure generative AI ROI to ensure smart investments and real business growth.

Learn how to use ChatGPT with Siri on your iPhone. A simple guide to integrating ChatGPT access via Siri Shortcuts and voice commands

Looking for beginner-friendly places to explore AI tools? Discover the top 9 online communities for beginners to learn about AI tools, with real examples, clear guidance, and supportive discussion spaces

Use ChatGPT from the Ubuntu terminal with ShellGPT for seamless AI interaction in your command-line workflow. Learn how to install, configure, and use it effectively

Discover the top AI search engines redefining how we find real-time answers in 2025. These tools offer smarter, faster, and more intuitive search experiences for every kind of user

How to print without newline in Python using nine practical methods. This guide shows how to keep output on the same line with simple, clear code examples

How to encourage ChatGPT safety for kids with 5 practical strategies that support learning, creativity, and digital responsibility at home and in classrooms